2021 / iOS + AN + WEB / SHIPPED

User Safety Controls

Defining safety for a global community of 90 million+ readers and writers.

Overview

Over COVID, Wattpad saw a large spike in new users, and with that came a large spike in hate & harassment tickets that operationally overloaded our Support team. We wanted to take this as an opportunity to build out a long overdue feature we had been advocating for: the ability to block users. But we quickly realized that Block couldn’t be the only solution for tackling our user and business problems.

We needed to take a holistic approach to defining safety on Wattpad that protected not only our users, but our internal teams on the frontline as well.

Team: Audience Trust

Product manager, Frontend + Backend engineers, Trust & Safety champions, Community Wellness team, Data champion

Role: Product strategy, UX flows & high-fidelity designs, Interaction design, Prototyping, Stakeholder management, QA

Our team's mission for 2021 was to help users protect themselves from unwanted interactions.

﹏

The success metric:

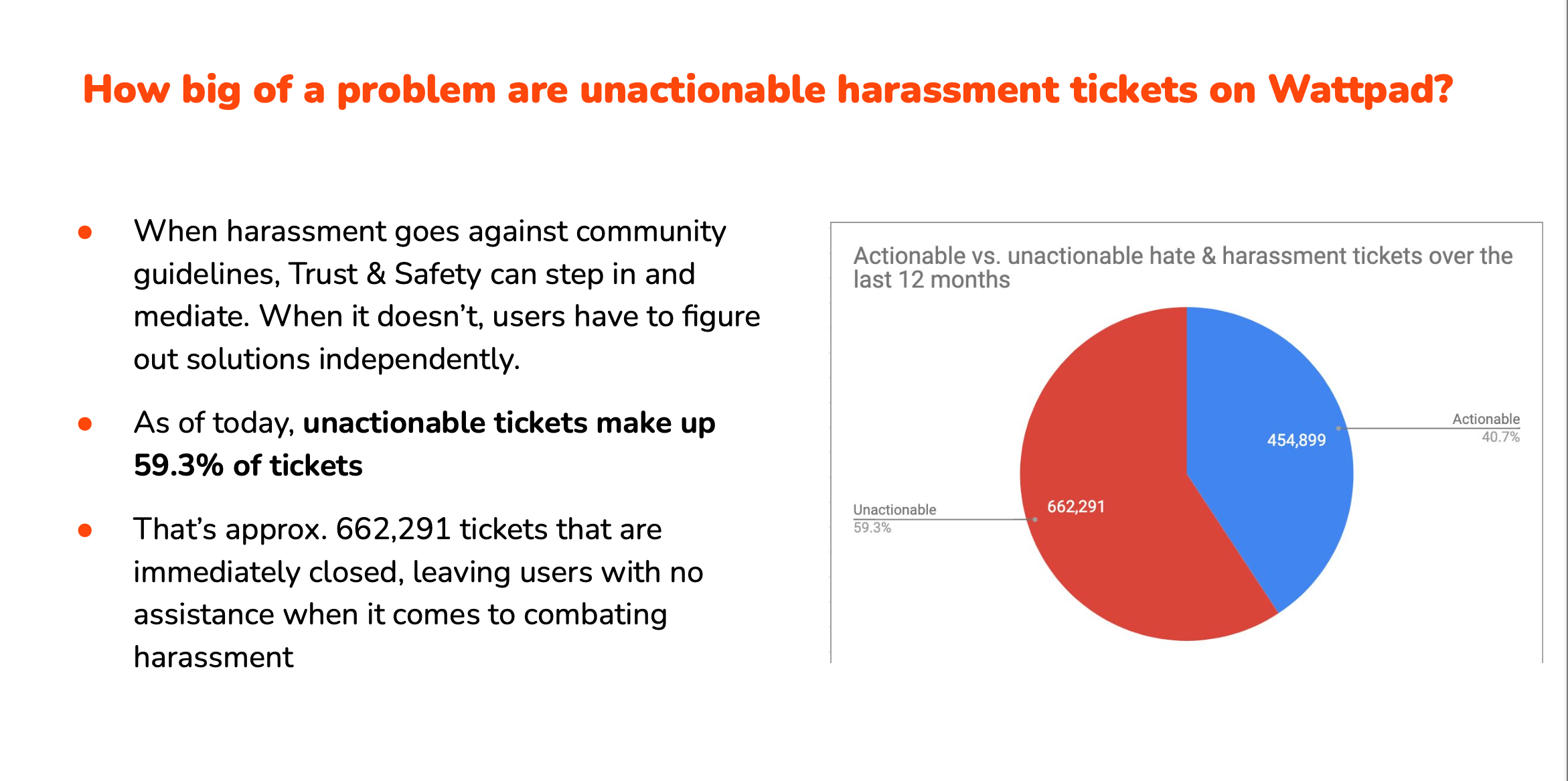

The proxy for a reduction in harassment on the platform is a reduction in the # of unactionable hate & harassment tickets that our Support team receives.

Understanding the problem space: Harassment on Wattpad

To kick off the discovery process, we first needed a good understanding of the current landscape of harassment on Wattpad. We set out to understand which negative interactions pose the greatest risk to our community and business, and which interactions generate the most unactionable tickets.

1. Consulting the experts: We worked very closely with our stakeholders from our Trust & Safety (T&S) and Community Wellness (CW) teams to understand the current landscape of user safety on Wattpad. Some things we wanted to know were:

- What does Wattpad consider to be harassment?

- Which user segments receive the most bullying/toxic interactions - writers or readers?

- What is an example of an unactionable hate & harassment report?

- What operational issues were the team facing when dealing with hate & harassment tickets?

After discussing with Trust & Safety, we decided to use unactionable Hate & Harassment tickets as a metric for harassment on Wattpad. This metric was a signal for issues that users could have solved on their own if the right safety tools were provided.

2. Working with data:

I mapped out where social interactions happen across our product (Story comments, Direct Messages, Profile posts, etc.). To better understand the highest-risk areas, I wanted to identify the volume of interactions in each of these product areas. During this process I also outlined the existing safety features users had to protect themselves in these areas (e.g. report a comment, mute a user).

Working with the Analytics team, we discovered that Story comments see 700 million interactions/month, with Profile posts second at 6M/month. This narrowed our direction for where we could provide the most value.

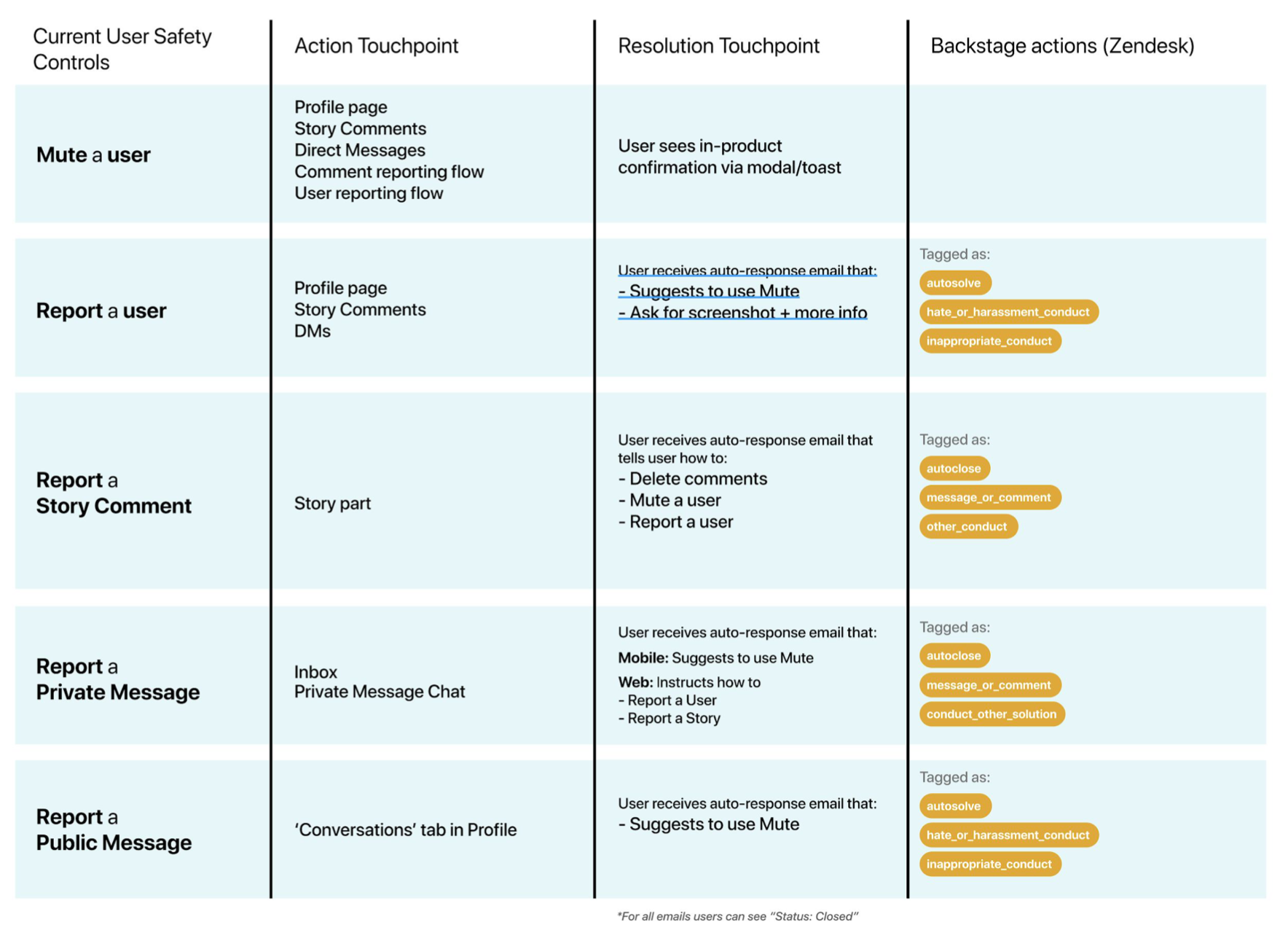

I created an overview of the safety tools we offer users and how each one gets tagged in Zendesk. From this map, we could explore opportunity areas to help users stay safe.

3. Survey + interview data:

We analyzed data from our monthly Sentiment Survey & App Reviews to pull out our users' top pain points around safety. In addition, we interviewed 6 of our top writers to see if there were specific pain points our most valuable user segment was facing. The major themes of our users' pain points with platform safety were:

What prevents users from feeling safe?

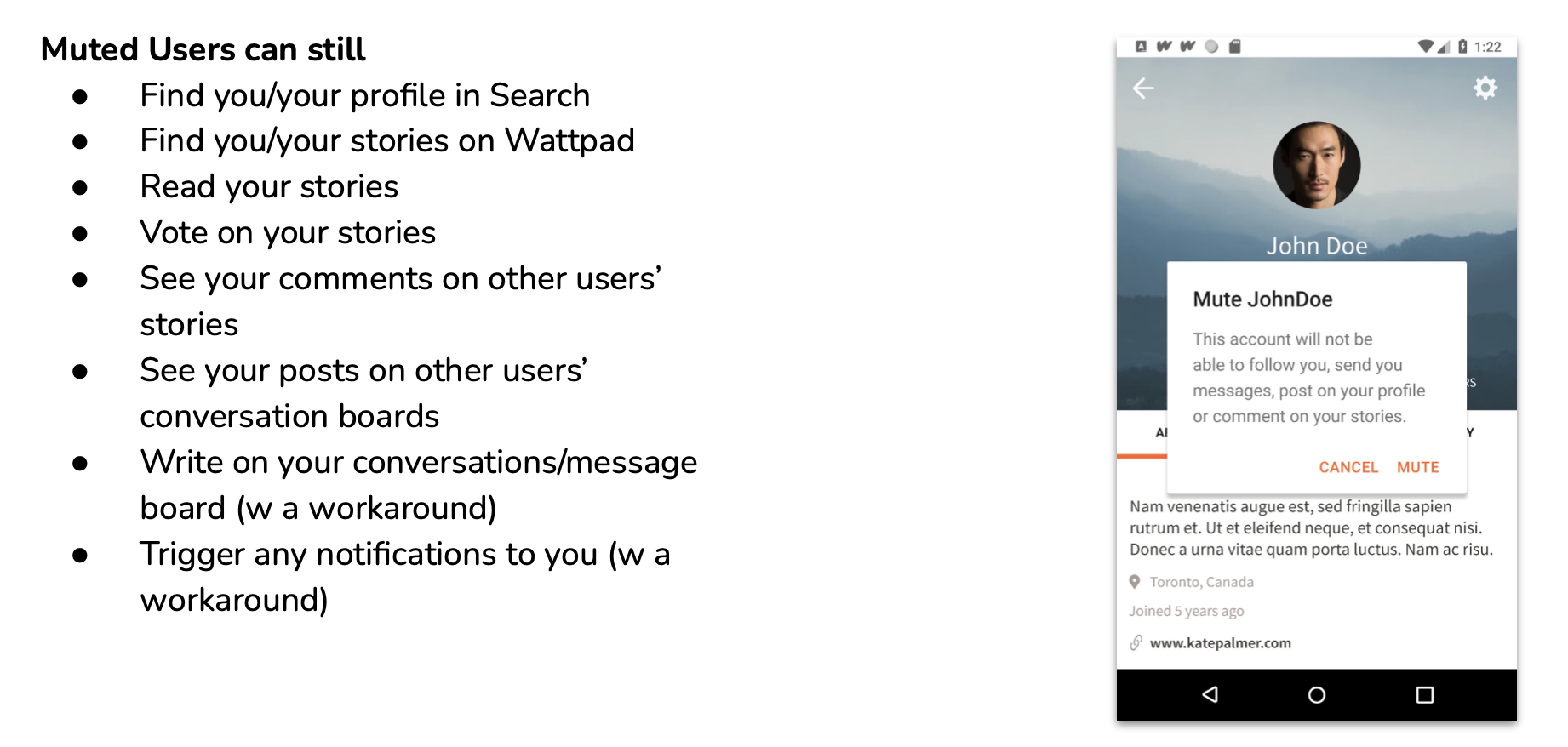

- Users are frustrated that our current tools don’t sufficiently protect them from harassment (Mute doesn't fully work).

- Users feel frustrated by lack of resolution when they submit a ticket.

- Users feel like they’re not being heard, that their concerns aren't being taken seriously, and that there is no urgency in responding to them.

- Writers want ways to prevent certain users from accessing their stories.

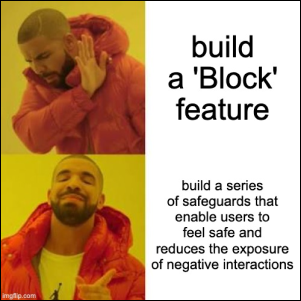

After better understanding what safety means to our users, we realized the solution wasn't as simple as releasing a Block feature.

What we were really building was a series of safeguards that enabled users to feel safe while reducing the frequency of negative interactions. These could be either reactive (a Block feature) or proactive (an anti-bullying marketing campaign).

At the project kick-off, the rest of the team was excited to get started on the Block feature. I presented the synthesized research and explained why building the Block feature wasn't the silver bullet solution that we believed it to be.

After the presentation, the team was aligned: the goal wasn't to build a Block feature. We needed to be grounded in our use cases and prioritize them based on where the biggest opportunities were. The desired outcome was to improve user sentiment around platform safety and reduce bullying tickets.

Understanding the Opportunities

After aligning the team on the core user problem areas, we covered the safety tools we currently offer our users. This included the ability to report story comments, private & public messages, and users. We also had a Mute feature, but it wasn’t fully built out and did not sufficiently protect users from harassers. This was one of the core issues users mentioned in our sentiment survey.

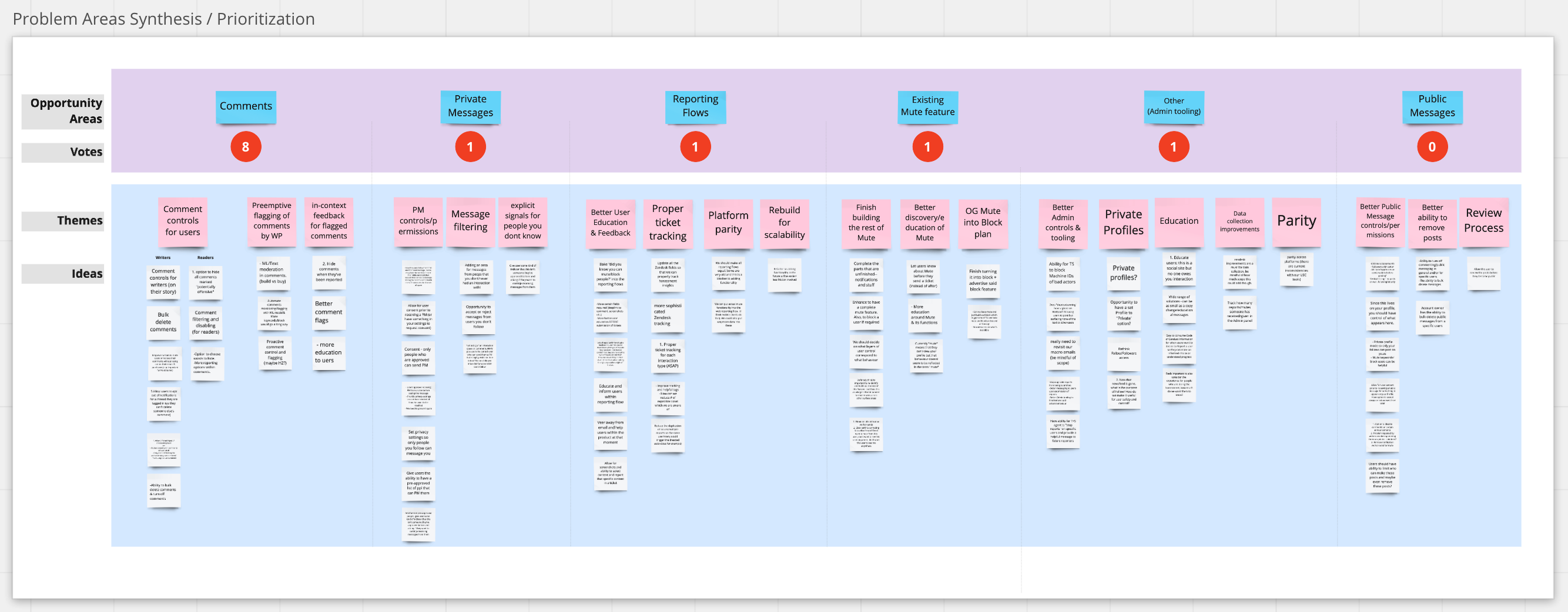

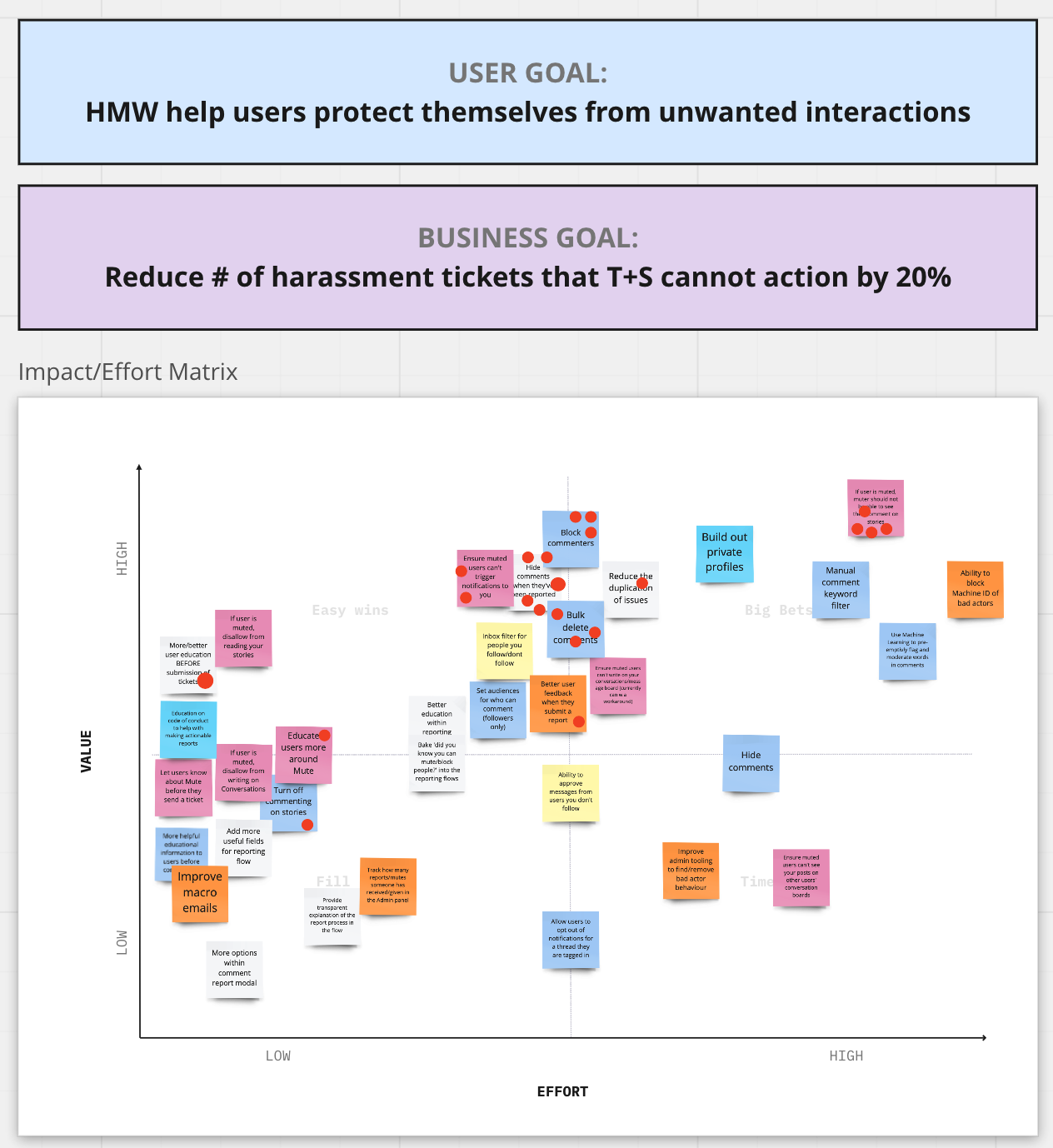

Ideation Session

With a good grasp of the core user problems around safety and the gaps in our current safety tools, I organized a workshop to ideate and prioritize solutions cross-functionally. Our engineers also participated, along with our Trust & Safety and Community Wellness manager.

One of the biggest gaps in our Mute feature was that users could still see comments from muted users. In the workshop, the team chose hiding user comments as the first solution to ship. It was a low(ish) lift project with high payoff, given the volume of interaction that happens in Story comments.

The other prioritized solutions were adding an option within the Report flow to funnel unactionable harassment tickets away from T&S, introducing in-context educational tips into the reporting flow, and Story Block (preventing certain users from accessing a writer's stories).

Solutions we shipped! 🎉

﹏

Comment Muting

- Now, when you mute a user, you won’t see their comments on any stories, and they won’t see yours.

- Users can now protect themselves in the largest area of interaction on Wattpad (~700M+ comments/month).

- Mute now covers all interaction areas (Private messages, Public Conversations, Comments).

- If you choose to unmute a user, all previous comments reappear.

Process highlights:

• Conducting competitive analysis to see what other large social media platforms were doing.

• Mapping out all user stories/use cases.

• Working with engineers to refine interaction for hiding muted comments.

• Organizing a company bug bash.

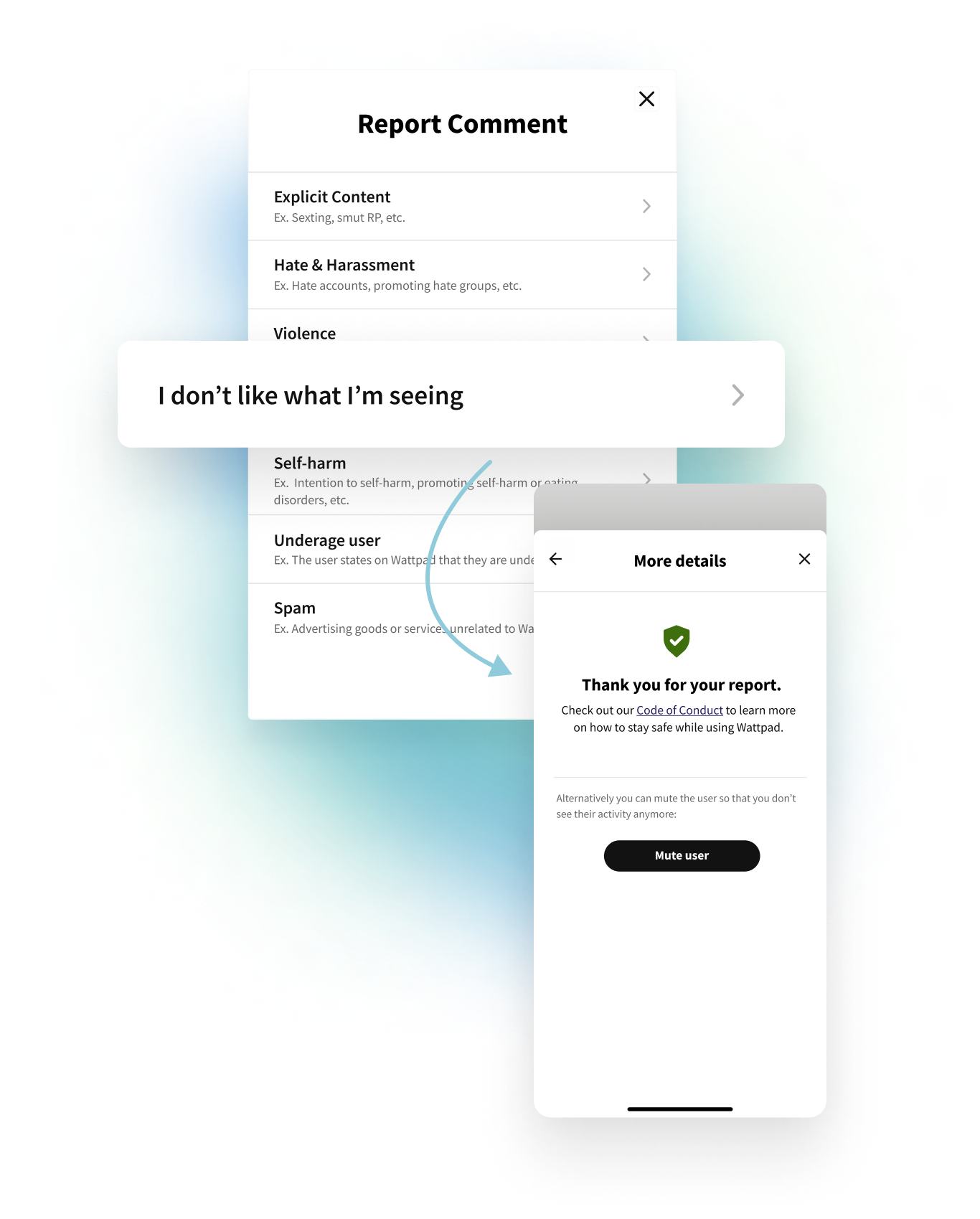

"I don't like what I'm seeing" Report Option

- This option lets users report comments that they don’t like seeing but that don’t violate guidelines. A big portion of our unactionable harassment tickets came from use cases like this.

- Ex. "This person doesn’t support my HarryxLiam ship, I’m going to report them."

- Adding this option helps reduce unactionable tickets for our Support team by funnelling the use case away from filing a Harassment ticket.

- An updated confirmation screen was added. The screen included a link to the Code of Conduct, with the goal of making platform rules more accessible, as well as a Mute user button.

- Previously inconsistent designs and copy were updated and unified across iOS, Android, and Web.

Process highlights:

• Competitive analysis

• Working with our Community Wellness/Support team to set up how it's tracked in Zendesk

Final Thoughts

At the start of the quarter, we were eager to start working on the Block feature, thinking it was exactly what users had been wanting. But by reassessing what was most important for both the users and the business, we were able to develop features that enhanced user safety and eased the Support team's workload. In just a few weeks, we had over 2.5 million instances of muting. Over 250,000 users had a muted list of 1-10 users. I'm incredibly proud to have been a part of this project and found it truly fulfilling to contribute to building out safety on Wattpad.

﹏

Currently open to opportunities! 🍵

Let's chat about the amazing things we can build together.

Reach me at:

© jess lee 2022
